My original situation summarizes as follows:

- a Raspberry Pi 2 is connected to my TV-screen via HDMI

- FullHD-video-decoding and displaying in general works like a charm.

- digital audio (PCM and AC3) should be embedded in the HDMI-data-stream

- my TV does not have a digital audio output but can consume the audio embedded in the stream

- my audio-decoder and -amplifier does not have an HDMI-input

- my software setup on the PI won’t allow the use of a HifiBerry-extension

What I would ideally need is something which

- takes an HDMI-input,

- splits out the digital-audio to an S/PDIF-output (optical or coaxial)

- and forwards the original HDMI-signal on an HDMI-output.

- Ideally this device is small and powered over USB (so that it doesn’t need an extra power-supply).

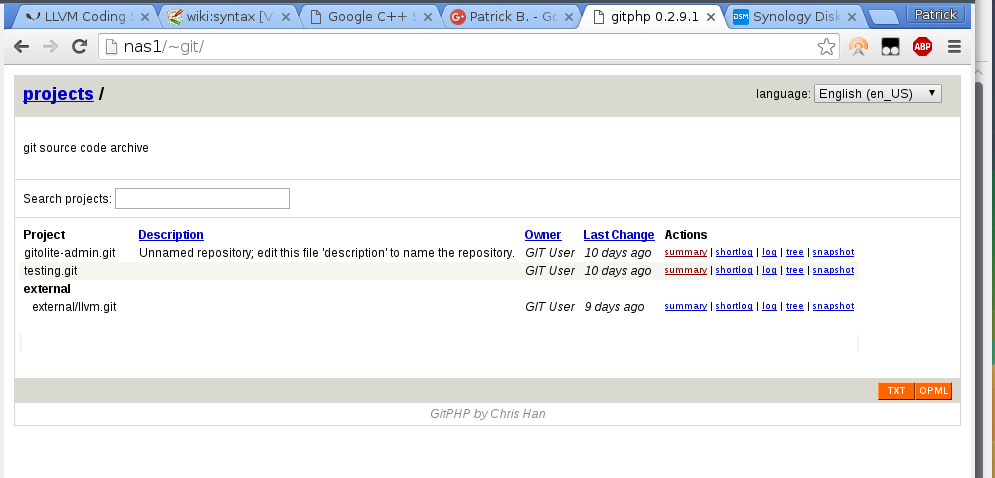

Believe it or not, this actually exists:

Self-powered HDMI-audio-splitter

It took me some time, but I eventually found one on Amazon for around 25 euros. Other people have seen this kind of device on eBay in 2014 and earlier.

Software problems

Inserting this device between the Raspi and the TV was straightforward. I just plugged everything into my running Pi and everything worked immediately, or so I thought. In fact there were two problems:

Problem 1: even when playing AC3-audio (2.0, 2.1 or 5.1), the Pi would only send PCM-frames.

Problem 2: after a cold-start, the Pi was not able to determine the correct resolution of my screen.

It turned out that both problems have the same root-cause:

When connecting an HDMI source to an HDMI sink (or when powering up one of them), the source gets some meta/configuration-information about the sink. It reads the so-called EDID-data. In it, among other things, the source can find the supported screen-resolutions and the supported audio(-container)-formats.
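To make the "supported screen-resolutions" part more concrete, here is a minimal Python sketch that reads the preferred resolution out of an EDID base block. It assumes the usual layout (8-byte fixed header, first 18-byte detailed timing descriptor at offset 54); the function name `preferred_resolution` is made up for this illustration and is not part of any Raspberry Pi tool.

```python
from __future__ import annotations

# Fixed 8-byte pattern that starts every EDID base block.
EDID_HEADER = bytes([0x00, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0x00])


def preferred_resolution(edid: bytes) -> tuple[int, int]:
    """Return (width, height) from the first detailed timing descriptor.

    Hypothetical helper: it only looks at the first descriptor slot,
    which normally carries the sink's preferred (native) timing.
    """
    if len(edid) < 128 or edid[:8] != EDID_HEADER:
        raise ValueError("not an EDID base block")
    dtd = edid[54:72]  # first 18-byte descriptor slot
    if dtd[0] == 0 and dtd[1] == 0:
        # A pixel clock of zero marks a non-timing descriptor.
        raise ValueError("first descriptor is not a timing descriptor")
    # Active pixels/lines are 12-bit values: low 8 bits in one byte,
    # high 4 bits in the upper nibble of a shared byte.
    width = dtd[2] | ((dtd[4] & 0xF0) << 4)
    height = dtd[5] | ((dtd[7] & 0xF0) << 4)
    return width, height


# Usage (with a dump written by tvservice -d):
#   with open("edid.dat", "rb") as f:
#       print(preferred_resolution(f.read()))
```

Running something like this on a dump taken right after a cold start and on one taken later would be one way to see whether the source really got different resolution data.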

Audio AC3-passthrough

Based on the list of accepted audio-formats the Raspi, or better the OpenMax-library (omx), will determine whether or not to pass-through AC3-audio (integrate it into the HDMI-data-stream). At some point in time that EDID data is read and if it does not list the capability, the software won’t even try to send AC3-data, but will decode it in software to Stereo-PCM.

But wait, now that there is the HDMI-splitter, where is the EDID-data coming from? Well, the splitter just passes through the information from my screen to the Raspi. My screen does not have a digital audio output. Most likely it tells sources that it doesn’t support anything other than PCM-audio.

Raspbian, which I’m using, has some pre-installed tools which can be used to read and decode the EDID data. There is tvservice for reading the EDID and dumping it to a file:

pi@vdr-pi ~ $ /opt/vc/bin/tvservice -d edid-5.1.dat

Written 256 bytes to edid-5.1.dat

And there is edidparser for decoding the dumped .dat-file.

pi@vdr-pi ~ $ /opt/vc/bin/edidparser edid-auto.dat | grep audio

HDMI:EDID monitor support - underscan IT formats:no, basic audio:yes, yuv444:yes, yuv422:yes, #native DTD:1

HDMI:EDID found audio format 2 channels PCM, sample rate: 44|48 kHz, sample size: 16|20|24 bits

HDMI:EDID has HDMI support and audio support

This last snippet shows the parsed EDID-data. It was as I suspected: nothing other than PCM is accepted by my HDMI-sink.
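For readers curious where the "found audio format" lines come from: the audio capabilities live in the CEA-861 extension block as 3-byte short audio descriptors. The following is only a rough sketch of that decoding, assuming the second 128-byte block of the 256-byte dump is a CEA-861 extension; `audio_formats` and the lookup tables are names invented here, and only the three format codes seen in this post are mapped.

```python
from __future__ import annotations

FORMATS = {1: "PCM", 2: "AC3", 7: "DTS"}    # subset of the CEA-861 format codes
RATES_KHZ = [32, 44, 48, 88, 96, 176, 192]  # bits 0..6 of the second descriptor byte


def audio_formats(cea_block: bytes) -> list[tuple[str, int, list[int]]]:
    """List (format, channels, sample rates in kHz) found in a CEA-861 block."""
    if len(cea_block) < 128 or cea_block[0] != 0x02:
        raise ValueError("not a CEA-861 extension block")
    # Data blocks occupy bytes 4 .. (DTD offset - 1); clamp to stay in bounds.
    end = min(cea_block[2], 127)
    found = []
    pos = 4
    while pos < end:
        tag = cea_block[pos] >> 5        # upper 3 bits: block type
        length = cea_block[pos] & 0x1F   # lower 5 bits: payload length
        if tag == 1:                     # 1 = audio data block
            payload = cea_block[pos + 1 : pos + 1 + length]
            for i in range(0, len(payload) - 2, 3):
                sad = payload[i : i + 3]
                code = (sad[0] >> 3) & 0x0F
                channels = (sad[0] & 0x07) + 1
                rates = [r for bit, r in enumerate(RATES_KHZ) if sad[1] & (1 << bit)]
                found.append((FORMATS.get(code, f"code {code}"), channels, rates))
        pos += 1 + length
    return found


# Usage (assumption: extension block directly follows the base block):
#   data = open("edid.dat", "rb").read()
#   print(audio_formats(data[128:256]))
```

If the sink only advertises PCM, as mine does, the returned list would contain a single PCM entry and no AC3 entry.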

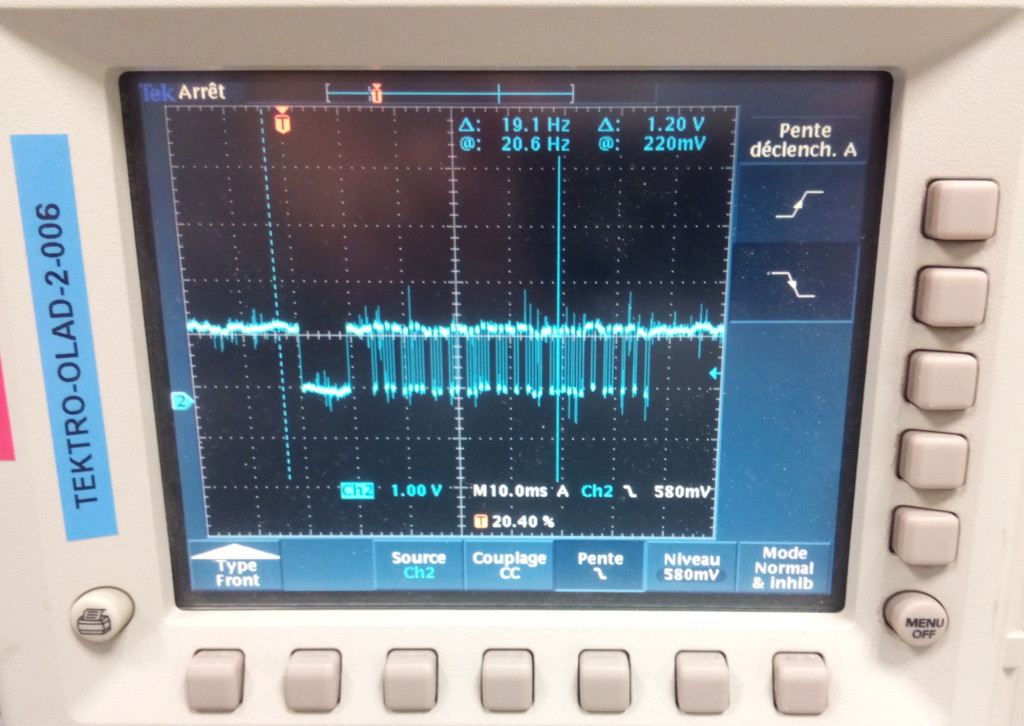

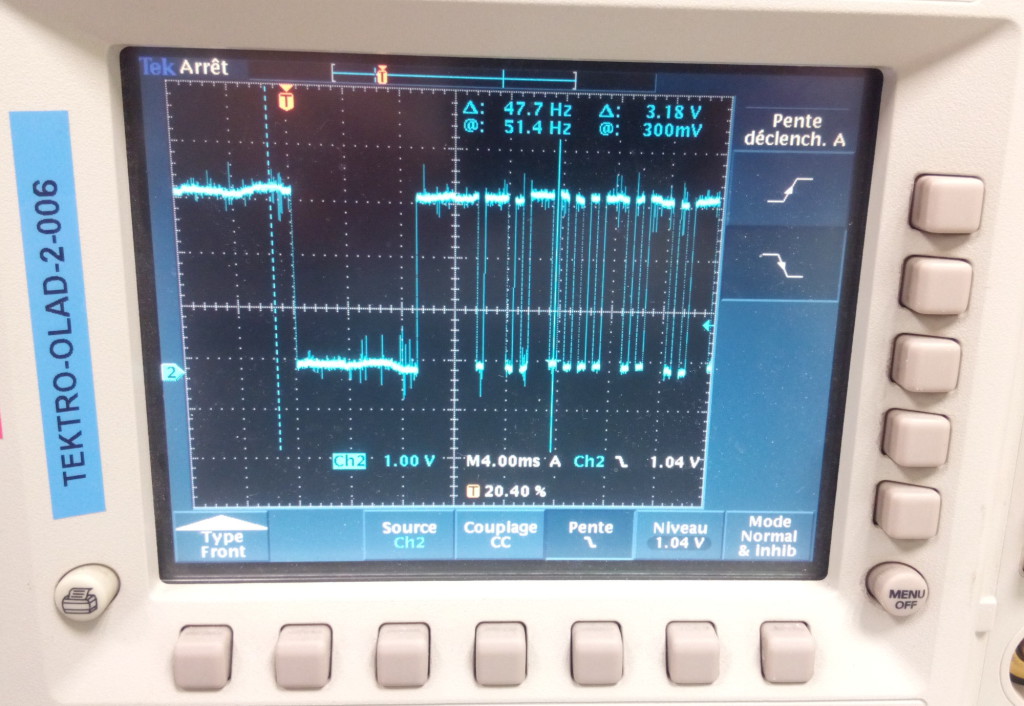

There seem to be several ways to convince the system that AC3-passthrough is possible, though. The easiest for me was to use the switch provided for this reason on the HDMI-splitter. By default it is set to auto, which gives the EDID seen above.

HDMI-audio-split: auto vs 5.1-mode

Putting the switch to 5.1 gave the following EDID-output, and AC3-passthrough started to work after I restarted the player-software:

pi@vdr-pi ~ $ /opt/vc/bin/edidparser edid.dat | grep audio

HDMI:EDID monitor support - underscan IT formats:no, basic audio:yes, yuv444:yes, yuv422:yes, #native DTD:1

HDMI:EDID found audio format 2 channels PCM, sample rate: 32|44|48|88|96|176|192 kHz, sample size: 16|20|24 bits

HDMI:EDID found audio format 6 channels AC3, sample rate: 32|44|48|88|96|176|192 kHz, bitrate: 1536 kbps

HDMI:EDID found audio format 6 channels DTS, sample rate: 32|44|48|88|96|176|192 kHz, bitrate: 1536 kbps

HDMI:EDID has HDMI support and audio support

The other solutions I found suggested changing some boot-parameters in /boot/config.txt. Several EDID-related values can be set to override the EDID-values delivered by the sink. For this problem the hdmi_force_edid_audio setting might work. I couldn’t use it because it conflicts with the solution to my other problem. (See here for more/all config.txt-parameters)

Screen resolution

By default the Raspi will select the native resolution of a screen as per EDID-data. My splitter is powered by the Raspi, so it is not running before the Raspi boots. It seems that at the moment the Raspi reads the EDID-information the splitter has not yet read the EDID from the screen. I don’t know what kind of data is received this way, but it is not correct.

I tried setting several HDMI-parameters in the config.txt, but nothing made it work. Except one: I forced the Raspi to read the EDID-data from a file instead of from the sink. For that, I put the edid.dat from above (the one I dumped with tvservice after having set the HDMI-splitter to the 5.1 mode) into the /boot-folder and added the following line to /boot/config.txt:

hdmi_edid_file=1

Now everything worked as I wanted it to.
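Since the Raspi will trust whatever is in /boot/edid.dat, it may be worth sanity-checking a dump before copying it there. The sketch below is one possible check, not something the Raspberry Pi tools provide: it relies on the EDID rule that every 128-byte block must sum to 0 modulo 256, and `edid_blocks_valid` is a hypothetical helper name.

```python
EDID_HEADER = bytes([0x00, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0x00])


def edid_blocks_valid(data: bytes) -> bool:
    """Return True if data looks like a structurally intact EDID dump.

    Checks only the container: whole 128-byte blocks, the fixed header,
    and the per-block checksum. It says nothing about whether the
    advertised resolutions or audio formats are the ones you want.
    """
    if len(data) == 0 or len(data) % 128 != 0:
        return False
    if data[:8] != EDID_HEADER:
        return False
    # Each block's bytes, including its final checksum byte, sum to 0 mod 256.
    return all(sum(data[i : i + 128]) % 256 == 0 for i in range(0, len(data), 128))


# Usage:
#   with open("edid.dat", "rb") as f:
#       print(edid_blocks_valid(f.read()))
```

A dump that fails this check was probably read while the splitter or screen was not ready, and should be taken again rather than used as the forced EDID.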